Why Immersive and Voice Interfaces Are the Next UX Frontier

Talking to your phone in public seemed strange and futuristic ten years ago. Today, asking Alexa to turn off the lights before bed or asking Siri to set a timer while cooking feels natural. Similarly, it has become increasingly common to hold up your phone to see how a couch will fit in your living room, or to put on a headset to tour a virtual home before buying it. Voice and immersive interfaces have quietly moved from novelty to necessity.

This shift is being driven by convenience, accessibility, and changing user expectations. Voice enables hands-free operation when your hands are busy, while immersive interfaces help us understand complex spatial environments better than a flat screen ever could. Both technologies also open doors for people with disabilities, offering ways to interact with the digital world that were previously limited or impossible.

The question for product teams is no longer whether these technologies matter, but when and how to integrate them. This article explains why voice and immersive interfaces are the next big frontier in user experience, which design principles apply, and how to start preparing your product for this future.


Why Voice and Immersive Interfaces Matter

Traditional graphical user interfaces (GUIs) have been the backbone of digital interaction since the birth of the personal computer. They rely heavily on screens, keyboards, and touch input. But GUIs reach their limits in many real-world scenarios. Imagine driving and needing to adjust your navigation route: a screen-based interface demands eyes and hands that are already occupied. Voice solves this by letting you simply say, “Find a faster route.” In hands-busy situations like this, voice user interfaces offer a more convenient way to interact, extending human-computer interaction beyond traditional input methods.

Accessibility is another area where GUIs often fall short. Swiping or tapping may be difficult or impossible for users with motor impairments, and reading on-screen menus is a challenge for people with visual impairments. Voice interaction removes many of these barriers, while immersive technologies such as virtual reality create experiences that rely more on spatial understanding and less on text-heavy interfaces. For example, a blind shopper who could navigate a virtual grocery store with audio feedback might shop far more efficiently than on a website built around still product images.

Beyond practicality, a cultural shift is taking place: users now expect natural, context-aware interactions. Younger users talk to devices as casually as they talk to friends, while immersive games like Fortnite and VR fitness apps make spatial environments second nature. A 12-year-old today may find scrolling through static images of furniture less intuitive than dropping a 3D couch model into their living room with AR.

Current Use Cases and Industry Examples

Voice and immersive technologies are not just ideas about the future. They are already integrated into sectors that prioritize accessibility and efficiency.

In healthcare, surgeons use voice commands to pull up patient records mid-operation, keeping their hands sterile and their attention on the procedure; this hands-free access to patient data improves both workflow and safety. Nurses dictate notes and records instead of typing them, reducing paperwork and freeing more time for patient care. In the automotive industry, Tesla and other manufacturers are building voice control into cars so drivers can adjust settings without distraction.

Finance, an industry known for conservative adoption, has embraced voice through services like Capital One’s Alexa integration, letting users check balances or pay bills conversationally. Meanwhile, at home, smart speakers like Google Nest are now household staples, controlling lights, thermostats, and appliances with a few spoken words. These devices also enable users to make phone calls using voice commands, further expanding their utility.

Immersive interfaces are just as pervasive. IKEA’s AR app lets shoppers see how furniture fits in their homes, addressing the age-old uncertainty of whether a couch will overwhelm a room. In education, VR simulations help medical students practice surgeries without risk. In aviation, pilots train in immersive cockpits before ever touching a real aircraft. Retailers like Nike use AR mirrors in stores so customers can virtually try on shoes, combining novelty with practical decision-making.

These examples are more than experiments; usage statistics show steady adoption. Amazon has reported more than 500 million Alexa-enabled devices in use worldwide, and AR filters on Snapchat and Instagram are engaged with billions of times daily. This growing base of voice-capable devices suggests that these behaviors are not only forming but sticking.

The Role of Voice in Interaction

Voice has rapidly become a primary way for users to interact with technology, especially as our lives become busier and more connected. Voice user interfaces allow people to control devices, search for information, and complete tasks simply by speaking. This hands-free approach is invaluable in situations where using a screen or keyboard isn’t practical: think of adjusting your smart home thermostat while cooking, or asking your virtual assistant to play music while driving.

Voice assistants like Amazon Alexa and Google Assistant, built into smart speakers, have brought voice commands into millions of homes, making it second nature to say, “Turn on the lights,” or “What’s the weather today?” Voice interaction is also transforming how we communicate with our phones, cars, and even appliances. Because speaking is often faster and more convenient than typing or tapping, voice is frequently the most intuitive choice for everyday tasks.

As voice technology continues to evolve, it’s not just about issuing commands. It’s about creating a more natural, conversational way for humans to interact with computers, bridging the gap between digital systems and the way we communicate in the real world.

Key Design Principles for Voice & Immersive UX

Designing for voice and immersion requires a fundamental mindset shift. A voice-first approach begins with rethinking tasks as conversations. Instead of pressing five buttons to book a meeting, the user simply says, “Schedule a call with Sarah for 2 PM tomorrow.” Designers must anticipate natural speech patterns, slang, and even pauses, so that interactions feel more like human conversation than issuing commands.
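To make the shift concrete, here is a minimal Python sketch of how a voice-first flow might turn that one sentence into the structured data a calendar needs. It assumes a single, hypothetical phrasing matched by a regular expression. Real assistants use trained language-understanding models that cope with far more variation, so treat this as an illustration of "slot filling", not a production parser.

```python
import re
from dataclasses import dataclass
from datetime import date, datetime, time, timedelta
from typing import Optional

# Hypothetical pattern covering one phrasing, e.g.
# "Schedule a call with Sarah for 2 PM tomorrow".
SCHEDULE_PATTERN = re.compile(
    r"schedule a (?:call|meeting) with (?P<person>[a-z]+)"
    r" (?:for|at) (?P<hour>\d{1,2})(?::(?P<minute>\d{2}))? ?(?P<ampm>am|pm)"
    r"(?: (?P<day>today|tomorrow))?",
    re.IGNORECASE,
)


@dataclass
class ScheduleRequest:
    person: str
    start: datetime


def parse_schedule(utterance: str, today: date) -> Optional[ScheduleRequest]:
    """Extract the person and start time, or return None if the phrasing is unknown."""
    match = SCHEDULE_PATTERN.search(utterance)
    if match is None:
        return None  # the caller should ask a clarifying question instead

    raw_hour = int(match["hour"])
    minute = int(match["minute"] or 0)
    if not 1 <= raw_hour <= 12 or minute > 59:
        return None  # not a valid 12-hour clock time

    # Convert to 24-hour time ("12 AM" -> 0, "2 PM" -> 14).
    hour = raw_hour % 12
    if match["ampm"].lower() == "pm":
        hour += 12

    day = today
    if (match["day"] or "").lower() == "tomorrow":
        day = today + timedelta(days=1)

    return ScheduleRequest(
        person=match["person"].capitalize(),
        start=datetime.combine(day, time(hour, minute)),
    )


request = parse_schedule("Schedule a call with Sarah for 2 PM tomorrow", date(2025, 3, 10))
print(request)  # ScheduleRequest(person='Sarah', start=datetime.datetime(2025, 3, 11, 14, 0))
```

When the pattern does not recognize an utterance, the function returns `None`. That gives the conversation a clear point at which to ask a follow-up question, rather than failing silently.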

Context-awareness is equally critical. Voice interfaces should shine in situations where screens are impractical, such as cooking, driving, or exercising. An AR application should step in when spatial understanding is required, such as visualizing a new office layout.

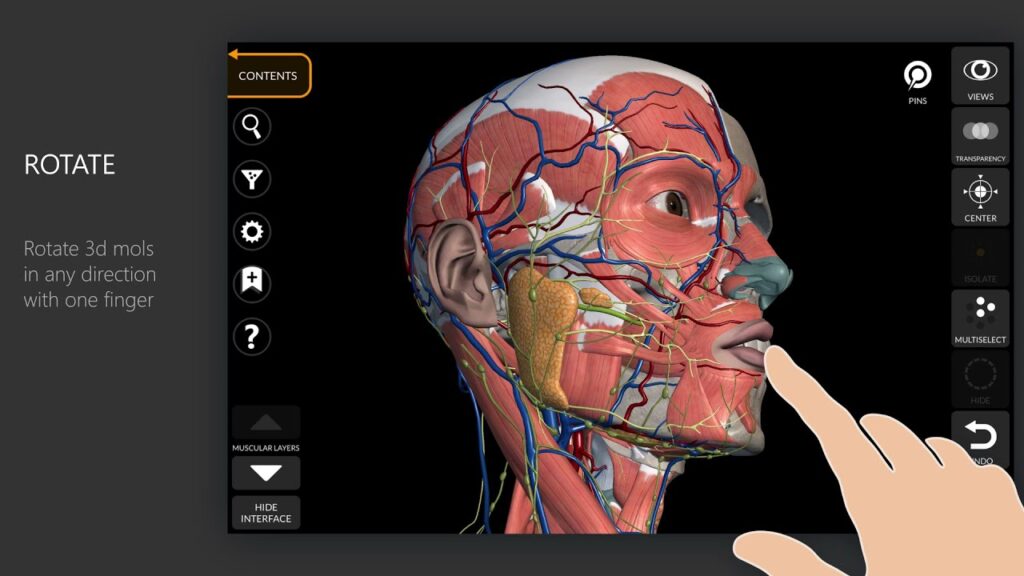

Spatial design is central to immersive experiences. A 3D anatomy app, for example, allows medical students to explore organs in ways flat diagrams cannot. Designing these experiences requires thinking not just about what the user sees but how they move and interact within a space.

Natural dialogue is another cornerstone. Voice systems must handle ambiguity gracefully. If a user says, “Play my playlist,” the system should clarify: “Do you mean the jazz playlist or the workout playlist?” By confirming actions or offering simple prompts, feedback loops ensure that users never feel lost. A timely and clear response from the system is essential for maintaining a smooth conversational flow.
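Here is a minimal sketch of that clarification step. It assumes the system already knows the user's playlist names. The function names are hypothetical, and the matching is a deliberately naive substring check.

```python
from typing import List


def describe_options(names: List[str]) -> str:
    """Turn ["jazz", "workout"] into "the jazz playlist or the workout playlist"."""
    labels = [f"the {name} playlist" for name in names]
    if len(labels) <= 2:
        return " or ".join(labels)
    return ", ".join(labels[:-1]) + ", or " + labels[-1]


def respond_to_play_request(utterance: str, playlists: List[str]) -> str:
    """Play a single clear match; otherwise ask which playlist the user meant."""
    if not playlists:
        return "You don't have any playlists yet. Would you like me to play something popular?"

    text = utterance.lower()
    # Naive matching (assumption): a playlist counts as named if its title appears in the utterance.
    named = [name for name in playlists if name.lower() in text]
    candidates = named or playlists

    if len(candidates) == 1:
        return f"Playing your {candidates[0]} playlist."
    return f"Do you mean {describe_options(candidates)}?"


print(respond_to_play_request("Play my playlist", ["jazz", "workout"]))
# Do you mean the jazz playlist or the workout playlist?
print(respond_to_play_request("Play my jazz playlist", ["jazz", "workout"]))
# Playing your jazz playlist.
```

The key design choice is that the system only asks when there is real ambiguity. A user with a single playlist, or one who names it, is never interrupted with a question.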

Feedback loops are crucial for user confidence. Systems should acknowledge commands, confirm actions, or ask for clarification when needed. Text-to-speech (TTS) technology lets these systems respond aloud, making the interaction more accessible and engaging.
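One way to encode those three feedback behaviors is a small decision function. The sketch below makes several assumptions: the speech recognizer returns a confidence score between 0 and 1, and each action is tagged as high- or low-stakes. The 0.7 threshold and the reply wording are illustrative. The returned string would be handed to whatever TTS engine the product uses.

```python
from enum import Enum


class Feedback(Enum):
    ACKNOWLEDGE = "acknowledge"  # understood and low-stakes: act, then say so
    CONFIRM = "confirm"          # understood but high-stakes: read back before acting
    CLARIFY = "clarify"          # not understood well enough: ask again


def choose_feedback(confidence: float, high_stakes: bool, threshold: float = 0.7) -> Feedback:
    """Pick a feedback style; the threshold is an assumed value, to be tuned with real users."""
    if confidence < threshold:
        return Feedback.CLARIFY
    if high_stakes:
        return Feedback.CONFIRM
    return Feedback.ACKNOWLEDGE


def spoken_reply(action: str, feedback: Feedback) -> str:
    """Text that would be passed to a text-to-speech engine (not shown here)."""
    if feedback is Feedback.CLARIFY:
        return "Sorry, I didn't catch that. Could you say it again?"
    if feedback is Feedback.CONFIRM:
        return f"Just to confirm: {action}. Should I go ahead?"
    return f"Okay, {action}."


print(spoken_reply("turning off the lights", choose_feedback(0.92, high_stakes=False)))
# Okay, turning off the lights.
print(spoken_reply("paying your electricity bill", choose_feedback(0.92, high_stakes=True)))
# Just to confirm: paying your electricity bill. Should I go ahead?
```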

User Expectations

Today’s users expect more from their devices than ever before. With the rise of voice user interfaces and immersive technologies, people anticipate that their interactions will be as smooth and natural as talking to another person. Users expect devices to understand not just words, but intent, context, and even emotion. Whether it’s making a phone call, searching for information, or controlling smart home technology, users want systems that respond quickly and accurately to their voice.

This shift in expectations is especially pronounced among younger generations, who have grown up with virtual assistants like Apple Siri and Google Assistant. For them, speaking to technology is as normal as sending a text. Users now expect their devices to support natural language, handle complex requests, and provide helpful responses without the need for rigid commands or menus.

Meeting these expectations means designing voice user interfaces that are intuitive, responsive, and capable of understanding the nuances of human communication. As technology advances, the bar for what users consider “normal” will only continue to rise.

Accessibility and Inclusion

Voice user interfaces and immersive technologies are powerful tools for making digital experiences more accessible and inclusive. For individuals with visual impairments, voice recognition and speech synthesis open up new ways to interact with apps and devices, removing the barriers posed by traditional graphical user interfaces. Instead of relying on sight or precise motor control, users can simply speak to navigate, search, and complete tasks.

For example, someone with limited mobility can use voice commands to control smart home devices, send messages, or access information without needing to touch a screen. Speech recognition technology also supports users with dyslexia or other learning differences, allowing them to interact with content in a way that suits their needs.

Designing voice user interfaces with accessibility in mind ensures that technology serves everyone, not just the majority. By prioritizing inclusive design, companies can create products that empower all users, regardless of their abilities or circumstances.

Speech Recognition Technology

At the heart of every voice user interface is speech recognition technology. Automatic speech recognition (ASR) systems convert spoken words into text, enabling devices to understand and respond to voice commands. Thanks to advances in machine learning and artificial intelligence, today’s speech recognition systems are more accurate and reliable than ever before.

Modern ASR can handle a wide range of accents, dialects, and languages, making voice interfaces accessible to a global audience. However, challenges remain: background noise, overlapping speech, and unique speech patterns can still cause errors. Developers must continually refine their systems to ensure that users are understood, even in less-than-ideal conditions.
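A common way to measure how well a recognizer copes with these conditions is word error rate (WER). WER counts the substituted, deleted, and inserted words needed to turn the system's transcript into what the user actually said, then divides by the number of words actually said. The sketch below computes it with a standard word-level edit distance. It only lowercases and splits on whitespace; real evaluations usually normalize punctuation and numbers as well.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words (classic dynamic-programming Levenshtein).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            substitution = prev[j - 1] + (ref[i - 1] != hyp[j - 1])
            curr[j] = min(prev[j] + 1,      # deletion
                          curr[j - 1] + 1,  # insertion
                          substitution)     # substitution or match
        prev = curr
    return prev[len(hyp)] / len(ref)


print(word_error_rate("turn on the kitchen lights", "turn on the chicken lights"))  # 0.2
```

Tracking WER separately for recordings made in quiet rooms, cars, and noisy warehouses is one way to see where refinement is most needed.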

The integration of speech recognition into everyday devices, from smartphones to smart speakers, has made voice technology a seamless part of daily life. As the technology continues to improve, users can expect even faster, more accurate responses to their spoken input.

Natural Language Understanding

Natural language understanding (NLU) is what allows voice user interfaces to move beyond simple command recognition and engage in real conversations. By leveraging natural language processing, these systems can interpret the meaning behind a user’s words, understand context, and respond appropriately. This is what makes it possible for a virtual assistant to handle requests like, “Remind me to call Mom when I get home,” or, “Book a table for two at my favorite Italian restaurant.”

Designing effective conversation flows is crucial for creating a smooth user experience. Voice interfaces must be able to manage ambiguity, ask clarifying questions, and remember previous interactions to provide relevant responses. The goal is to make the interaction feel as natural and intuitive as possible, so users feel understood and supported.
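Remembering earlier turns can be as simple as keeping the details gathered so far (often called slots) and asking only for what is still missing. The sketch below assumes a hypothetical table-booking intent. It also assumes an upstream NLU step has already extracted slot values from each utterance, so it only shows how state carries across turns.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical booking intent: the details needed before a table can be booked.
REQUIRED_SLOTS = ("party_size", "restaurant", "time")
FOLLOW_UP_QUESTIONS = {
    "party_size": "How many people should I book for?",
    "restaurant": "Which restaurant would you like?",
    "time": "What time would you like the table?",
}


@dataclass
class BookingDialogue:
    slots: Dict[str, str] = field(default_factory=dict)

    def update(self, new_slots: Dict[str, str]) -> Optional[str]:
        """Merge slots from the latest turn; return the next question, or None when complete."""
        # Later turns overwrite earlier values, so a correction replaces only what changed.
        self.slots.update({name: value for name, value in new_slots.items() if value})
        for name in REQUIRED_SLOTS:
            if name not in self.slots:
                return FOLLOW_UP_QUESTIONS[name]
        return None


dialogue = BookingDialogue()
# "Book a table for two at my favorite Italian restaurant"
# (hypothetical: the restaurant name was resolved from the user's history)
print(dialogue.update({"party_size": "2", "restaurant": "Trattoria Roma"}))
# What time would you like the table?
print(dialogue.update({"time": "7 PM"}))  # None: everything needed is known
# "Actually, make it three": only the changed slot arrives
print(dialogue.update({"party_size": "3"}))  # None
print(dialogue.slots["party_size"])  # 3
```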

As NLU technology advances, voice user interfaces will become even more capable of understanding complex requests, managing multi-turn conversations, and adapting to individual user preferences. This will unlock new possibilities for how we interact with technology in every aspect of our lives.

How to Assess Your Product’s Readiness

Before jumping into implementation, teams must determine whether voice or immersion makes sense at all. Start by mapping your product’s most frequent tasks. Which of them could be a natural spoken command but feel awkward on a screen? Which ones need to be visualized spatially?
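Those questions can be turned into a rough triage checklist. The attributes and the three-step threshold below are hypothetical heuristics, not an established scoring method. The point is to make the audit explicit so the team can discuss it.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    hands_or_eyes_busy: bool   # is the user usually doing something else at the same time?
    screen_steps: int          # taps or screens the task needs today
    needs_spatial_view: bool   # does the outcome depend on size, layout, or position?


def suggest_modality(task: Task, step_threshold: int = 3) -> str:
    """Hypothetical heuristic: spatial tasks suggest AR/VR; busy or tedious tasks suggest voice."""
    if task.needs_spatial_view:
        return "AR/VR candidate"
    if task.hands_or_eyes_busy or task.screen_steps >= step_threshold:
        return "voice candidate"
    return "keep on screen"


tasks = [
    Task("Reorder my usual meal", hands_or_eyes_busy=False, screen_steps=5, needs_spatial_view=False),
    Task("See if a couch fits the living room", hands_or_eyes_busy=False, screen_steps=2, needs_spatial_view=True),
    Task("Read past receipts", hands_or_eyes_busy=False, screen_steps=1, needs_spatial_view=False),
]
for task in tasks:
    print(f"{task.name}: {suggest_modality(task)}")
```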

Technical and environmental constraints matter. A voice feature in a noisy warehouse may fail without noise-canceling microphones. An AR app may frustrate users with older phones that lack processing power. Even lighting conditions can affect AR accuracy.

Accessibility audits also reveal opportunities. Ask: who is excluded by our current design? A travel booking app that requires constant scrolling may overwhelm users with visual impairments. Adding a voice-based booking flow could open the experience to a broader audience.

Practical Steps to Start Implementing

The best entry point is small, low-risk experiments. Start with an internal audit of which interactions in your app could be converted to voice. A food delivery app, for instance, could prototype a feature that lets users reorder their “usual” meal with a quick voice command. Voice recognition can also fill out forms or complete online transactions, automating data entry through speech.
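Deciding what counts as the “usual” meal is a small but real design decision. Here is a minimal sketch that assumes the app keeps each user's past orders as lists of item names (a hypothetical data shape). It picks the most frequently repeated order and falls back to an open question when nothing repeats.

```python
from collections import Counter
from typing import Optional, Sequence, Tuple


def usual_order(past_orders: Sequence[Sequence[str]], min_repeats: int = 2) -> Optional[Tuple[str, ...]]:
    """Return the most frequently repeated order, or None if nothing was ordered often enough."""
    # Sort items so that ["rolls", "pad thai"] and ["pad thai", "rolls"] count as the same order.
    counts = Counter(tuple(sorted(order)) for order in past_orders)
    if not counts:
        return None
    order, times = counts.most_common(1)[0]
    return order if times >= min_repeats else None


def reorder_prompt(past_orders: Sequence[Sequence[str]]) -> str:
    """Spoken prompt for a hypothetical "reorder my usual" voice command."""
    usual = usual_order(past_orders)
    if usual is None:
        return "What would you like to order?"
    return f"Reorder your usual: {', '.join(usual)}?"


history = [["pad thai", "spring rolls"], ["burger"], ["spring rolls", "pad thai"]]
print(reorder_prompt(history))  # Reorder your usual: pad thai, spring rolls?
```

The voice command still ends with a question rather than placing the order immediately. Spending money is exactly the kind of action that deserves a confirmation.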

Prototyping is crucial. Build small AR or VR demos and test them with real users, not just in lab conditions. Watching someone struggle or thrive with an immersive prototype will reveal insights you cannot predict.

Multi-modal interfaces are often the sweet spot. For example, in a fitness app, a user might say, “Start my 20-minute HIIT workout,” while still glancing at the screen for visual progress bars. Voice and visuals should complement, not compete.

Fallback flows are just as important. If a voice system cannot understand a request, it should gracefully hand the user back to touch or text input. Similarly, if poor lighting prevents AR from working, the app should fall back to static previews.
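Both fallbacks can be sketched as simple rules. The two-misses limit and the `ar_tracking_ok` flag are assumptions for illustration. In a real app, that flag would come from whatever tracking-quality signal your AR framework exposes.

```python
from dataclasses import dataclass

CONTINUE_VOICE = "continue_voice"
REPROMPT = "reprompt"                  # ask again by voice
SHOW_TOUCH_INPUT = "show_touch_input"  # hand the user back to touch or text


@dataclass
class VoiceFallback:
    max_misses: int = 2  # assumption: hand off after two misunderstandings in a row
    misses: int = 0

    def next_step(self, understood: bool) -> str:
        if understood:
            self.misses = 0
            return CONTINUE_VOICE
        self.misses += 1
        if self.misses >= self.max_misses:
            self.misses = 0  # start fresh if the user returns to voice later
            return SHOW_TOUCH_INPUT
        return REPROMPT


def choose_product_view(ar_tracking_ok: bool) -> str:
    """Show the AR view only when tracking is reliable; otherwise use static previews."""
    # ar_tracking_ok is a hypothetical flag derived from the AR framework's tracking quality.
    return "ar_view" if ar_tracking_ok else "static_preview"


session = VoiceFallback()
print(session.next_step(understood=False))  # reprompt
print(session.next_step(understood=False))  # show_touch_input
```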

Challenges, Trade-Offs, and Mistakes to Avoid

Designing effective voice and immersive experiences is a significant challenge: teams must handle system errors, user privacy concerns, and user expectations. Recognition errors can quickly irritate users. Early voice dictation is a prime example, where misrecognized words produced amusing but useless results. Even today, accents, dialects, or background noise can trip up these systems.

Environmental issues are equally thorny. A warehouse worker shouting into a voice system over machinery noise, or a student trying to use AR in a dimly lit classroom, may hit real barriers. Privacy adds another layer of complexity: voice recognition systems often rely on physical voice characteristics to distinguish between users, and voice data can even reveal certain health conditions. Designing for these realities requires careful testing across contexts.

Overloading users with features they don’t need is one of the biggest mistakes. Don’t add voice just because you can. For example, requiring users to speak commands in a quiet library is awkward and impractical. Likewise, requiring AR when a picture would do risks frustrating users.

Finally, complexity is the enemy. The best voice and immersive experiences feel seamless and intuitive. If users need a manual to understand how to talk to your system, the design has failed.

Future Trends & What to Watch

Given the pace of progress in natural language processing, future voice systems are likely to understand intent, tone, and even emotion more effectively. Imagine a customer support bot that detects frustration in a user’s voice and escalates the issue to a human before the customer explicitly asks.

Hardware is also catching up. AR glasses appear to be nearing mainstream adoption, promising lightweight, stylish alternatives to bulky headsets. As devices become more capable, immersive experiences will feel less like tech demos and more like everyday utilities.

The future is multimodal. Users will move fluidly between voice, gestures, visuals, and spatial environments. Think of walking into a smart home where lights adjust with a voice command, music shifts with a hand gesture, and AR glasses display reminders as you move.

But progress brings responsibility. Voice and immersive interfaces collect sensitive information, including spatial maps of homes and speech patterns. Businesses need to prepare for stricter regulation and ethical scrutiny. Being transparent about how you use data can set you apart from the competition.

Conclusion

Voice and immersive interfaces are no longer optional experiments; they are fast becoming core expectations of modern user experience. Their ability to solve real-world problems, improve accessibility, and match evolving cultural norms means they will define the next era of digital interaction.

The path forward does not require massive, risky investments. Start small: use voice or immersion to reimagine a single feature or task in your product. Build, test, learn, and improve. In doing so, you prepare your product for a time when seamless, natural interaction is expected rather than treated as a luxury.

The future of user experience (UX) is about conversations, environments, and experiences that feel as natural as talking with a friend or walking through a room. The sooner you begin experimenting, the better positioned your product will be to meet users where they are going.

Get a UX & CRO Expert’s Eyes on Your Website. Book a free 30-minute UX Teardown and get actionable insights on what’s costing you conversions — no fluff, just fixes you can implement right away.

Book a Free UX Audit

Related posts

AI Won’t Kill UX, Our Habits Will: Why Designers Must Stay Intentional

Let’s be honest: AI isn’t ruining UX. We are. Not on purpose but through habits we don’t always notice. We […]

5 Common Mistakes That Kill Form Conversions + How To Avoid Them

Form conversion is a crucial metric for any business aiming to turn visitors into leads and customers, and it is […]

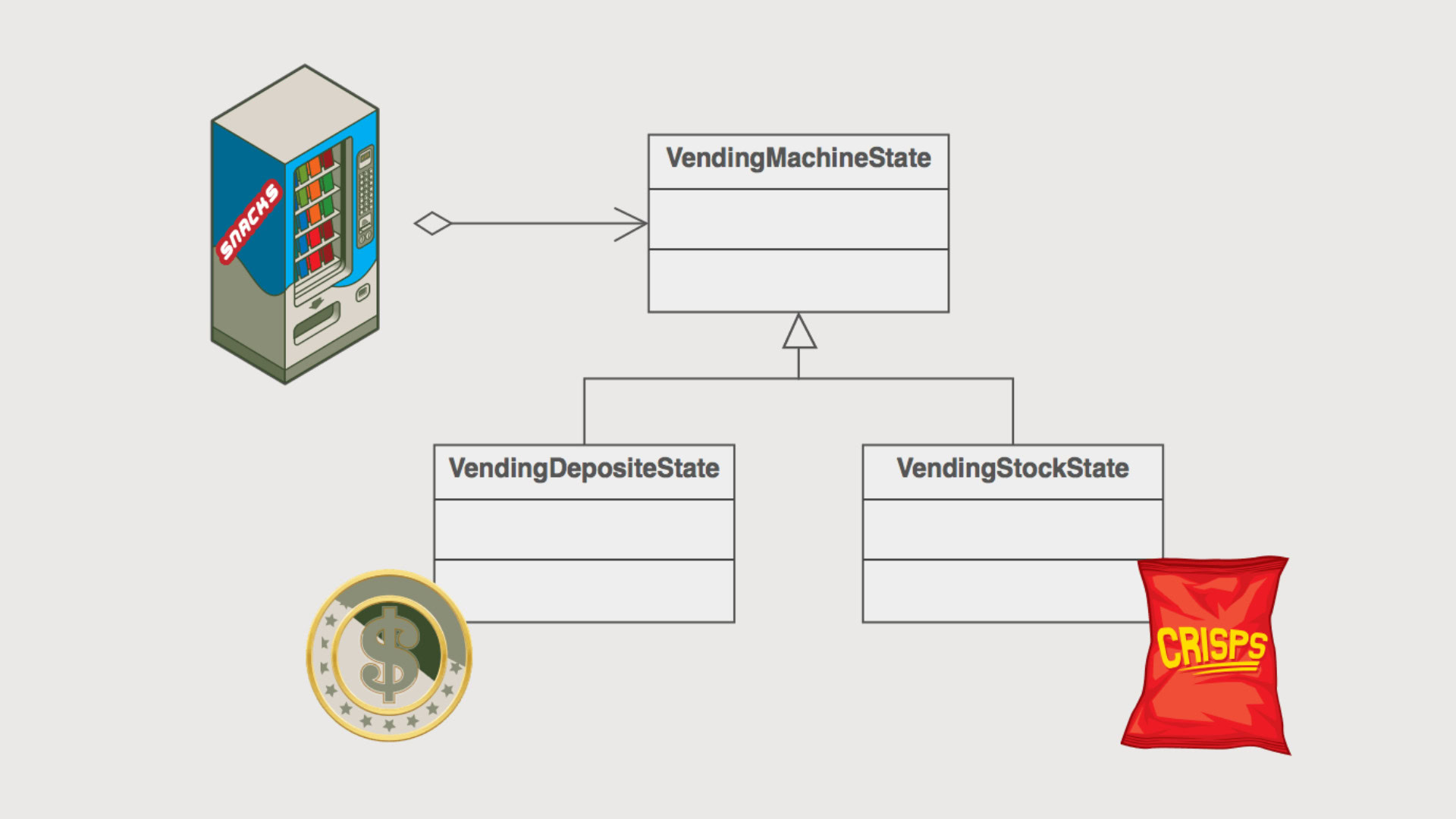

State Pattern: The Key to Seamless and Predictable User Experiences

Every digital experience we engage with daily, whether checking messages, streaming music, or shopping online, hinges on one crucial factor: […]

Creative product design that gets results

Take your company to the next level with world class user experience and interface design.

get a free strategy session