How Leading Brands Are Using Voice User Interfaces: Insights and Learnings

Voice User Interfaces (VUI) are transforming the way consumers interact with technology. As the capabilities of voice recognition and natural language processing (NLP) have advanced, the adoption of Voice User Interfaces has accelerated across various industries. This shift is not just a technological evolution but a fundamental change in user experience design, driven by the increasing demand for hands-free, intuitive, and efficient modes of interaction. This article explores how leading brands are leveraging Voice User Interfaces, delving into their strategies, implementations, and the valuable insights gained from their experiences.

Understanding Voice User Interfaces

Voice User Interfaces enable users to interact with systems through voice commands. This interaction paradigm includes various components such as voice recognition, natural language understanding (NLU), and speech synthesis. Voice recognition technology captures spoken words and converts them into text. Natural language understanding then interprets this text to understand user intent, while speech synthesis generates a spoken response from the system.
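The three stages described above can be sketched as a small pipeline. This is a minimal illustration under stated assumptions, not a description of any vendor's implementation: the function names (`recognize_speech`, `understand`, `respond`) and the intents are hypothetical, the "audio" is already a text transcript, and the final step returns text rather than synthesized speech.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Intent:
    """What the user wants (name) plus any extracted parameters (slots)."""
    name: str
    slots: dict = field(default_factory=dict)


def recognize_speech(audio_transcript: str) -> str:
    """Stand-in for voice recognition; a real system would decode audio here."""
    return audio_transcript.strip().lower()


def understand(text: str) -> Intent:
    """Toy natural language understanding: map text to an intent with slots."""
    match = re.search(r"set (?:a |an )?timer for (\d+) minutes?", text)
    if match:
        return Intent("set_timer", {"minutes": int(match.group(1))})
    if "weather" in text:
        return Intent("get_weather")
    return Intent("unknown")


def respond(intent: Intent) -> str:
    """Build the reply text that a speech synthesizer would read aloud."""
    if intent.name == "set_timer":
        return f"Timer set for {intent.slots['minutes']} minutes."
    if intent.name == "get_weather":
        return "Here is today's weather."
    return "Sorry, I didn't catch that."


def handle_utterance(audio_transcript: str) -> str:
    """Run the full pipeline: recognition, then understanding, then response."""
    text = recognize_speech(audio_transcript)
    intent = understand(text)
    return respond(intent)
```

Calling `handle_utterance("Set a timer for 10 minutes")` walks through all three stages. Production systems replace each stub with a trained model, but the hand-off between stages follows the same order.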

The benefits of Voice User Interfaces for businesses and users are substantial. For businesses, VUIs offer an opportunity to enhance customer engagement, streamline operations, and gain a competitive edge. For users, a VUI provides a hands-free, efficient, and often more accessible way to interact with technology. This is particularly beneficial where manual interaction is impractical or impossible, such as while driving or cooking.

Current trends in Voice User Interfaces development include the integration of artificial intelligence (AI) to improve the accuracy and context-awareness of voice interactions. Technologies such as machine learning and deep learning are being used to refine voice recognition and natural language processing, making VUI systems more responsive and intuitive. Additionally, the proliferation of smart devices, from speakers to home appliances, has expanded the reach and potential applications of Voice User Interfaces.

Case Study 1 – Apple Siri

Apple’s Siri, introduced in 2011 with the iPhone 4S, was one of the first widely adopted Voice User Interfaces (VUI) in the consumer market. Initially, Siri was designed to perform basic tasks such as setting reminders, sending messages, and answering simple queries. Over the years, Siri has undergone significant evolution, with continuous improvements in natural language processing (NLP), voice recognition, and integration within Apple’s ecosystem.

Evolution of Apple Siri

Early Development and Introduction (2011-2015)

Siri’s debut marked a significant milestone in VUI technology. Initially, Siri’s capabilities were limited, focusing on straightforward tasks like setting alarms, making phone calls, and providing weather updates. During this phase, Siri’s voice recognition accuracy and natural language understanding were in their nascent stages. Apple’s primary goal was to introduce users to the concept of interacting with their devices through voice commands, paving the way for future advancements.

Expansion and Integration (2016-2019)

As user expectations grew, so did Siri’s capabilities. Apple began integrating Siri more deeply into its ecosystem, allowing for more complex interactions. This period saw the introduction of new features such as Siri Shortcuts, which enabled users to create custom voice commands for specific tasks. Siri also became a central feature in Apple’s HomePod, providing a voice-controlled smart speaker experience.

During this time, Apple focused on improving Siri’s language support, expanding its capabilities to recognize and understand multiple languages and dialects. This expansion was crucial for increasing Siri’s global user base and making the technology more accessible to non-English speaking users.

Enhancements in AI and Machine Learning (2020-2023)

The integration of advanced AI and machine learning techniques marked a significant leap in Siri’s evolution. Apple began leveraging these technologies to enhance Siri’s contextual understanding and response accuracy. The use of neural networks improved Siri’s ability to handle more complex queries and engage in more natural, conversational interactions with users.

A notable enhancement was the on-device processing of voice commands. By processing more data locally on the device, Apple improved Siri’s response times and reduced reliance on cloud-based processing, addressing privacy concerns and enhancing user trust.

Integration with iOS Ecosystem and Devices

Siri’s integration within Apple’s ecosystem is a cornerstone of its utility. Users can access Siri across multiple devices, creating a seamless experience. For instance, Siri can hand off tasks between an iPhone and a Mac, or control smart home devices via HomeKit. This tight integration allows users to perform actions like sending messages, playing music, setting reminders, and controlling smart home devices with voice commands, regardless of which Apple device they are using.

Enhancements in Voice Recognition and AI Capabilities

With the integration of OpenAI’s ChatGPT, which Apple announced in 2024, Apple has taken further steps to enhance Siri’s AI capabilities. The integration of advanced AI models is intended to improve Siri’s ability to understand context, process complex queries, and provide more accurate and relevant responses. The key enhancements include:

- Improved Natural Language Understanding: Drawing on ChatGPT’s language capabilities, Siri can handle more nuanced and conversational queries, with a better grasp of context and intent than before.

- Contextual Awareness: Siri can maintain context across multiple interactions, providing more coherent and contextually relevant responses.

- Enhanced Voice Recognition: The integration of machine learning algorithms has refined Siri’s ability to accurately recognize and process diverse accents, dialects, and speech patterns.
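Contextual awareness of the kind listed above can be illustrated with a toy example. This is not how Siri works internally; it is a minimal sketch, assuming a keyword-based parser and a hypothetical `DialogueContext` object, that shows how details carried over from earlier turns let a short follow-up question be resolved.

```python
from dataclasses import dataclass, field


@dataclass
class DialogueContext:
    """Remembers slot values from earlier turns in the same conversation."""
    slots: dict = field(default_factory=dict)


def parse_turn(text: str) -> dict:
    """Toy parser: pick out a topic and a city by keyword matching."""
    text = text.lower()
    found = {}
    for topic in ("weather", "time"):
        if topic in text:
            found["topic"] = topic
    for city in ("paris", "tokyo", "london"):
        if city in text:
            found["city"] = city
    return found


def answer(text: str, context: DialogueContext) -> str:
    # Slots found in this turn override remembered ones; anything missing
    # is filled from the context, which is what lets a follow-up stay short.
    context.slots.update(parse_turn(text))
    topic = context.slots.get("topic")
    city = context.slots.get("city")
    if topic is None or city is None:
        return "Could you tell me more?"
    return f"Looking up the {topic} in {city.title()}."
```

With a single `DialogueContext`, asking "What's the weather in Paris?" and then "And in Tokyo?" reuses the remembered topic, so the second question is answered as a weather query for Tokyo.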

Insights and Learnings from Apple’s Approach to Voice User Interfaces

Apple’s approach to VUI offers several valuable insights:

- Prioritizing User Privacy: Apple’s commitment to processing voice data on-device highlights the importance of prioritizing user privacy in VUI development.

- Ecosystem Integration: A seamless and interconnected user experience across devices can significantly enhance the utility and appeal of VUI.

- Continuous Improvement: Regular updates and refinements, driven by user feedback and advancements in AI, are crucial for maintaining a high standard of functionality and user satisfaction.

Challenges Faced and Solutions Implemented

Despite its success, Siri has faced several challenges:

- Accuracy of Voice Recognition: Early versions of Siri struggled with accurately recognizing diverse accents and speech patterns. Apple addressed this by integrating more advanced machine learning models and expanding its training datasets.

- Privacy Concerns: Concerns about data privacy have been a persistent challenge. Apple’s solution was to process more voice data on-device and implement robust encryption measures to protect user information.

- Keeping Up with Competitors: With strong competition from other voice assistants such as Google Assistant and Amazon Alexa, Apple has had to innovate continuously. The ChatGPT integration reflects Apple’s effort to stay at the forefront of VUI technology.

Case Study 2 – Amazon Alexa

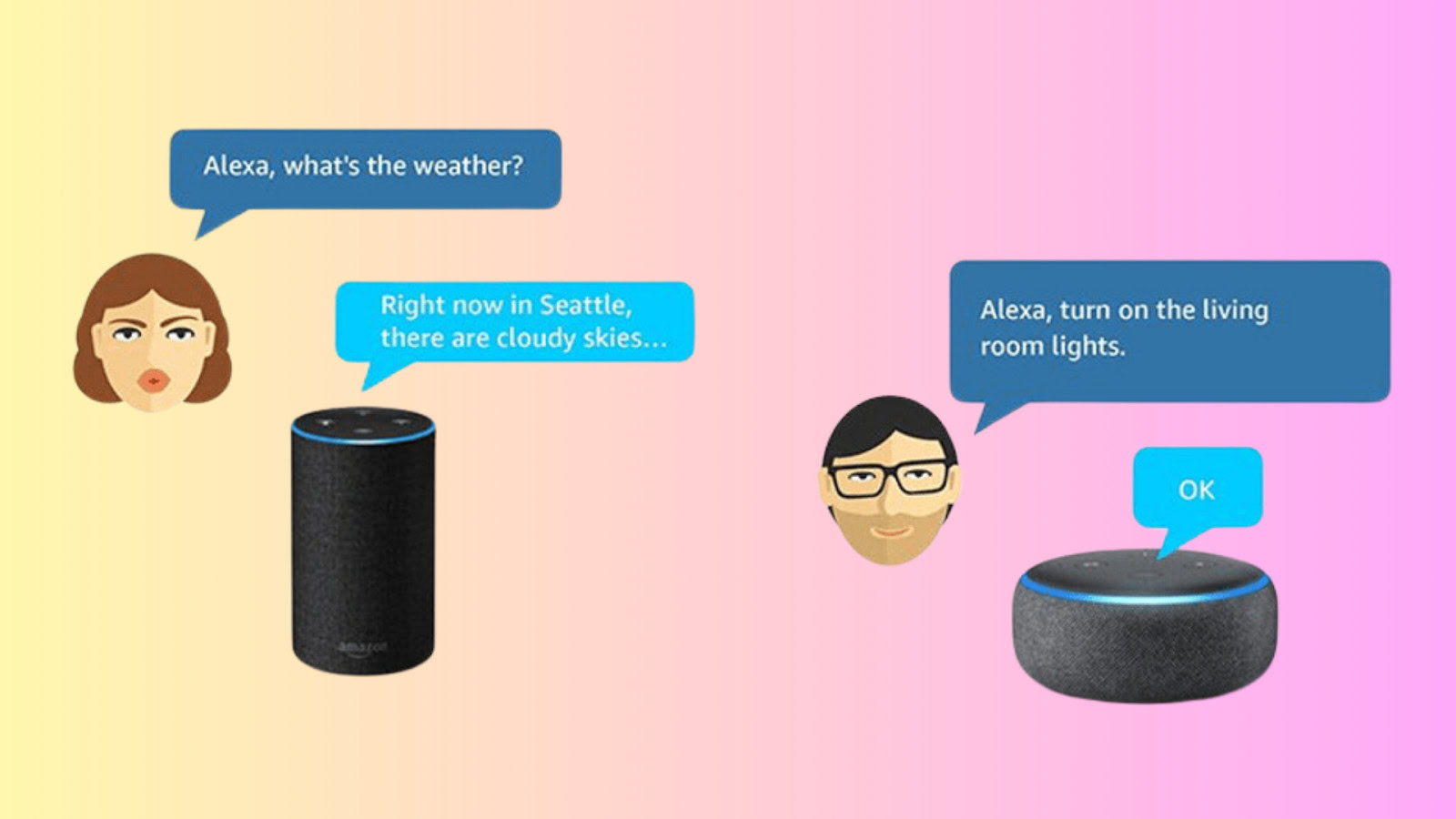

Amazon Alexa, launched in November 2014, is a virtual assistant developed by Amazon. Initially integrated into the Amazon Echo smart speaker, Alexa was designed to provide hands-free voice interaction for tasks such as playing music, setting alarms, and retrieving real-time information. Over the years, Alexa has evolved significantly, becoming a central hub for smart home automation, integrating with various devices and services, and expanding its capabilities through continuous updates and the introduction of Alexa Skills.

Implementation Strategies Used by Amazon for Alexa

Amazon employed several key strategies in the development and implementation of Alexa:

- Open Ecosystem: Amazon adopted an open ecosystem approach, allowing third-party developers to create and publish Alexa Skills. This strategy significantly expanded Alexa’s functionality and appeal.

- Continuous Improvement: Amazon continuously updates Alexa’s software, enhancing its natural language processing capabilities, improving its understanding of user intents, and adding new features.

- Strategic Partnerships: Amazon partnered with numerous companies to integrate Alexa into a wide range of products, from smart speakers to household appliances and automobiles.

- Developer Support: Amazon provides robust support to developers through comprehensive documentation, tools, and resources, encouraging the creation of a diverse range of Alexa Skills.

Integration with Smart Home Devices

Alexa’s ability to integrate with smart home devices is one of its most compelling features. Amazon has worked closely with manufacturers to ensure compatibility with a wide array of smart home products, such as lights, thermostats, locks, and security cameras. The introduction of the Alexa Smart Home Skill API allows developers to create custom interactions for their devices, making it easier for users to control their smart homes through voice commands.
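The general idea of turning a spoken command into a device action can be sketched as follows. This is a simplified illustration and does not reproduce the actual message format of the Alexa Smart Home Skill API; the `Device` class, the command names, and the result fields are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Device:
    """Hypothetical smart home device with a small amount of state."""
    device_id: str
    kind: str            # e.g. "light" or "thermostat"
    power: str = "OFF"
    target_temp: float = 20.0


def handle_command(devices: dict, device_id: str, command: str, value=None) -> dict:
    """Apply a command to a device and return a small status report."""
    device = devices.get(device_id)
    if device is None:
        return {"ok": False, "error": "unknown_device"}
    if command in ("turn_on", "turn_off"):
        device.power = "ON" if command == "turn_on" else "OFF"
        return {"ok": True, "device_id": device_id, "power": device.power}
    if command == "set_temperature" and device.kind == "thermostat":
        device.target_temp = float(value)
        return {"ok": True, "device_id": device_id, "target_temp": device.target_temp}
    return {"ok": False, "error": "unsupported_command"}
```

In this sketch the voice assistant has already resolved "turn on the kitchen light" to a device identifier and a command. Returning an explicit error for unknown devices or unsupported commands gives the assistant something concrete to say back to the user.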

Development of Alexa Skills

The development of Alexa Skills is a cornerstone of Amazon’s strategy to expand Alexa’s capabilities. Skills are voice-driven capabilities that enhance the functionality of Alexa, similar to apps on a smartphone. Developers use the Alexa Skills Kit (ASK) to create these Skills, which can range from providing information and entertainment to controlling smart home devices and facilitating transactions.
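To make the idea of a Skill more concrete, the sketch below handles a simplified request and returns a spoken reply. It is an illustration under stated assumptions rather than Amazon's reference implementation: the request and response envelopes follow the general JSON shape used by custom skills as we understand it, `CoffeeFactIntent` is a hypothetical custom intent, and a production Skill would normally be built with the Alexa Skills Kit SDK and checked against Amazon's current documentation.

```python
def build_response(speech_text: str, end_session: bool = True) -> dict:
    """Wrap reply text in a simplified skill response envelope."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": speech_text},
            "shouldEndSession": end_session,
        },
    }


def handle_request(event: dict) -> dict:
    """Route a simplified skill request to the matching reply."""
    request = event.get("request", {})
    request_type = request.get("type")
    if request_type == "LaunchRequest":
        # The user opened the skill without asking for anything specific.
        return build_response("Welcome. Ask me for a coffee fact.", end_session=False)
    if request_type == "IntentRequest":
        intent_name = request.get("intent", {}).get("name")
        if intent_name == "CoffeeFactIntent":  # hypothetical custom intent
            return build_response("Here is a coffee fact.")
        if intent_name == "AMAZON.HelpIntent":
            return build_response("You can ask me for a coffee fact.", end_session=False)
    return build_response("Sorry, I can't help with that yet.")
```

The handler keeps routing (which intent was requested) separate from response construction, which is the same split that makes a Skill resemble a small app: each intent is one feature with its own reply.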

Insights and Learnings from Amazon’s Approach to Voice User Interfaces

Amazon’s approach to VUI has provided several valuable insights:

- User-Centric Design: Amazon prioritizes user experience, ensuring that interactions with Alexa are intuitive and natural. This user-centric approach has been crucial in driving adoption and engagement.

- Scalability and Flexibility: The open ecosystem and developer support have allowed Alexa to scale rapidly and adapt to various use cases, making it a versatile platform.

- Continuous Innovation: Amazon’s commitment to innovation, through regular updates and new features, has kept Alexa at the forefront of the VUI market.

Challenges Faced and Solutions Implemented

Despite its success, Amazon has faced several challenges in the development and deployment of Alexa:

- Privacy Concerns: Users have expressed concerns about privacy and data security. Amazon has implemented stringent privacy policies, introduced features like the ability to delete voice recordings, and improved transparency to address these concerns.

- Voice Recognition Accuracy: Achieving high accuracy in voice recognition, especially in noisy environments or with diverse accents, has been challenging. Amazon has invested in advanced machine learning algorithms and extensive training datasets to enhance Alexa’s accuracy.

- Integration Complexity: Ensuring seamless integration with a wide range of smart home devices posed significant technical challenges. Amazon addressed this by developing robust APIs and providing extensive support to developers and manufacturers.

Overall, Amazon’s strategic approach, commitment to innovation, and focus on user experience have positioned Alexa as a leading voice assistant, driving the adoption of Voice User Interfaces across various industries and households.

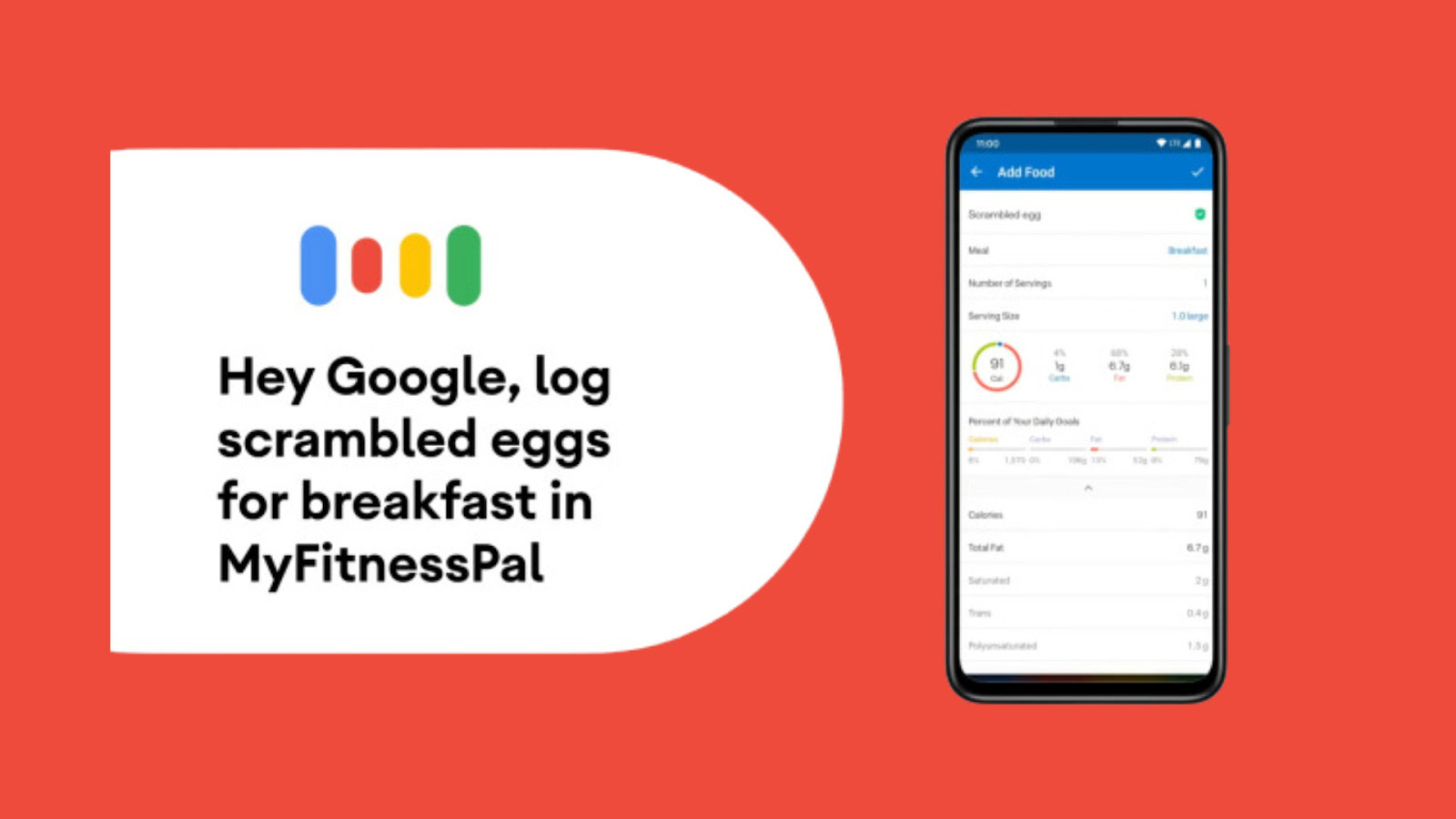

Case Study 3 – Google Assistant

Google Assistant, launched in May 2016, is a virtual assistant powered by artificial intelligence and developed by Google. Initially introduced as part of the Google Allo messaging app and Google Home smart speaker, Google Assistant has evolved into a ubiquitous service available on smartphones, smart displays, wearables, and more. Leveraging Google’s extensive knowledge graph, it provides users with personalized assistance, answering questions, managing tasks, and controlling smart home devices.

Implementation Strategies Used by Google for Assistant

Google employed several key strategies to implement Google Assistant effectively:

- Deep Integration with Google Services: Google Assistant is deeply integrated with Google’s ecosystem, including Google Search, Gmail, Calendar, Maps, and more. This allows for seamless access to a wealth of information and services.

- Contextual Awareness: Google has focused on making Assistant contextually aware, enabling it to understand and respond appropriately to follow-up questions and conversations.

- Cross-Platform Availability: Google Assistant is available across a wide range of devices and platforms, including Android and iOS smartphones, smart speakers, smart displays, and wearables.

- Developer Ecosystem: Google has cultivated a robust developer ecosystem, providing tools and resources for third-party developers to create Actions on Google, which enhance Assistant’s functionality.

Integration with Google Services and Third-Party Apps

Google Assistant’s integration with Google services provides a significant advantage, allowing it to leverage data from Google Search, Maps, Calendar, and more to deliver personalized and contextually relevant information. Additionally, Google Assistant integrates with numerous third-party apps and services, enabling users to perform tasks such as booking rides, ordering food, and controlling smart home devices through voice commands.

Use of Natural Language Processing and Machine Learning

Google Assistant utilizes advanced natural language processing (NLP) and machine learning (ML) techniques to understand and respond to user queries. Key components include:

- BERT and Transformer Models: Google employs cutting-edge NLP models like BERT (Bidirectional Encoder Representations from Transformers) to improve language understanding and context recognition.

- Speech Recognition: Google’s speech recognition technology is highly accurate, capable of understanding diverse accents and languages, and improving over time with continuous learning.

- Personalization: Machine learning algorithms enable Google Assistant to learn user preferences and provide personalized responses and recommendations.

Insights and Learnings from Google’s Approach to Voice User Interfaces

Google’s approach to VUI has provided several valuable insights:

- Comprehensive Ecosystem Integration: Deep integration with Google’s services and third-party apps enhances user experience and utility, making Assistant a central hub for various tasks.

- Focus on Context and Continuity: By prioritizing contextual awareness and the ability to handle follow-up questions, Google has improved the conversational capabilities of Assistant.

- Leveraging AI and ML: Continuous advancements in AI and ML have been crucial in refining the natural language understanding and personalization capabilities of Google Assistant.

Challenges Faced and Solutions Implemented

Despite its success, Google Assistant has encountered several challenges:

- Privacy and Data Security: Concerns about privacy and data security are prevalent among users. Google has implemented robust privacy controls, including the ability to manage and delete voice recordings and providing transparency about data usage.

- Complexity of Natural Language Understanding: Understanding nuanced human language and context poses significant challenges. Google continues to invest in research and development to improve NLP capabilities and contextual understanding.

- Competition and Differentiation: The competitive landscape for virtual assistants requires continuous innovation and differentiation. Google focuses on leveraging its extensive data and AI capabilities to maintain a competitive edge.

Overall, Google’s strategic approach, integration with its comprehensive ecosystem, and continuous advancements in AI and ML have positioned Google Assistant as a leading voice assistant, driving the adoption of Voice User Interfaces technology and enhancing user experiences across various devices and platforms.

Case Study 4 – Samsung Bixby

Background on Samsung Bixby and Its Evolution

Samsung Bixby, introduced in March 2017, is a virtual assistant developed by Samsung Electronics. Initially launched alongside the Samsung Galaxy S8 and S8+ smartphones, Bixby was designed to provide a more seamless and intuitive way for users to interact with their devices. Bixby integrates deeply with Samsung’s ecosystem, including smartphones, smart TVs, home appliances, and wearable devices. Over time, Samsung has continued to enhance Bixby’s capabilities, focusing on improving its contextual understanding and expanding its functionalities.

Implementation Strategies Used by Samsung for Bixby

Samsung employed several strategies to implement Bixby effectively:

- Deep Device Integration: Bixby is deeply integrated into Samsung’s devices, allowing it to control device settings, access apps, and perform complex tasks.

- Comprehensive Features: Samsung designed Bixby to handle a wide range of tasks, from voice commands and visual search to text and image recognition.

- Samsung Developer Program: Samsung encouraged third-party developers to create Bixby Capsules (similar to Alexa Skills) to extend Bixby’s functionality through the Bixby Developer Studio.

- Focus on Natural Language: Samsung emphasized natural language processing to enable Bixby to understand and execute user commands effectively.

Integration with Samsung Devices and Services

Bixby’s integration with Samsung’s ecosystem is one of its strongest features. It is compatible with a wide array of Samsung devices, including:

- Smartphones and Tablets: Bixby is pre-installed on Samsung Galaxy smartphones and tablets, providing voice-activated control over device settings and apps.

- Smart TVs: Bixby can control Samsung Smart TVs, allowing users to search for content, adjust settings, and navigate menus using voice commands.

- Home Appliances: Integration with Samsung’s home appliances, such as refrigerators, washing machines, and air conditioners, enables users to manage their smart homes through voice commands.

- Wearable Devices: Bixby is also available on Samsung’s wearable devices, such as smartwatches, enhancing hands-free interaction and convenience.

Focus on Contextual Understanding and Personalized Responses

Samsung has focused on improving Bixby’s contextual understanding and ability to provide personalized responses:

- Context Awareness: Bixby can understand the context of user interactions, allowing for more natural and continuous conversations.

- Personalization: Bixby learns user preferences and habits over time, offering personalized recommendations and responses based on individual usage patterns.

- Bixby Vision: This feature uses the device’s camera to provide information about the surroundings, recognize objects, and translate text, enhancing the assistant’s contextual capabilities.

Insights and Learnings from Samsung’s Approach to Voice User Interfaces

Samsung’s approach to Voice User Interfaces with Bixby has provided several valuable insights:

- Device-Centric Integration: Deep integration with Samsung devices enhances user experience by providing seamless control over various device functionalities.

- Contextual Intelligence: Emphasizing contextual understanding and personalization improves the relevance and accuracy of responses, making interactions more intuitive.

- Developer Engagement: Encouraging third-party developers to create Bixby Capsules has expanded Bixby’s capabilities and enriched the overall ecosystem.

Challenges Faced and Solutions Implemented

Despite its integration and features, Bixby has faced several challenges:

- Market Competition: Competing with established voice assistants like Amazon Alexa and Google Assistant has been challenging. Samsung continues to enhance Bixby’s unique features and leverage its device ecosystem to differentiate itself.

- User Adoption: Encouraging users to adopt Bixby over other assistants available on Samsung devices (such as Google Assistant) has been difficult. Samsung has focused on improving Bixby’s capabilities and promoting its advantages.

- Language and Accent Recognition: Achieving high accuracy in understanding diverse languages and accents has been a technical challenge. Samsung has invested in improving Bixby’s NLP capabilities to address this.

Overall, Samsung’s strategic integration of Bixby within its ecosystem, focus on contextual and personalized responses, and efforts to engage developers have been key to its approach to Voice User Interfaces. Continued enhancement, along with progress on the challenges above, will be crucial for Bixby’s future growth and user adoption.

Future Trends in Voice User Interfaces

The field of Voice User Interfaces (VUI) is rapidly evolving, driven by emerging technologies and innovative approaches. One significant trend is the advancement in natural language processing (NLP) and artificial intelligence (AI), which are making voice assistants more intuitive and capable of understanding complex user commands. The integration of AI with Voice User Interfaces is expected to enable more personalized and context-aware interactions, providing users with more relevant and timely responses. Additionally, the incorporation of multimodal interactions, where voice is combined with visual and tactile inputs, is set to enhance user experiences, particularly in smart home and automotive applications.

In the future, Voice User Interfaces are predicted to transform various industries significantly. In the healthcare sector, voice assistants could play a crucial role in patient monitoring, providing medical advice, and managing appointments. The retail industry is likely to see increased adoption of Voice User Interfaces for customer service, personalized shopping experiences, and hands-free transactions. In the automotive industry, Voice User Interfaces will continue to improve in-car navigation, entertainment, and control systems, making driving safer and more enjoyable. The workplace is another area where Voice User Interfaces can enhance productivity by facilitating hands-free operation of devices, scheduling meetings, and managing workflows.

For businesses considering Voice User Interfaces implementation, several recommendations can be made. It is essential to invest in robust NLP and AI technologies to ensure accurate and responsive voice interactions. Businesses should focus on creating seamless integrations with their existing digital ecosystems to provide a cohesive user experience. Additionally, it is crucial to address privacy and security concerns by implementing stringent data protection measures. Engaging with users to understand their needs and preferences can also help in designing more effective Voice User Interfaces solutions. Lastly, businesses should consider the scalability of their Voice User Interfaces solutions to accommodate future advancements and growing user demands.

Conclusion

In conclusion, the work of leading brands such as Apple, Amazon, Google, and Samsung offers valuable insights into both the potential and the challenges of Voice User Interfaces. These brands have demonstrated the importance of deep integration, contextual understanding, and continuous innovation in driving user adoption and engagement. Voice User Interfaces offer significant opportunities, but they also present challenges such as privacy concerns, language recognition accuracy, and market competition. Even so, their potential to enhance user experiences, improve accessibility, and streamline operations makes them a compelling area for businesses to explore. Embracing this technology can lead to innovative solutions and a competitive edge in the rapidly evolving digital landscape.
